I’ve been playing a lot lately with embeddings and semantic search implementations and strategies, so I’ll share some of what I’ve learned - tailored to search systems that already use ElasticSearch as the engine behind them.

Some terms

Let’s start with some introductions. What’s ElasticSearch? You can view it as a manager of instances of Lucene, an open-source search library. Under the hood, each index is backed by Lucene (every shard is its own Lucene index), and through this setup you can query your data. It’s to Lucene roughly what Kubernetes is to containers (keeping a sense of proportion, since this is a very simplified overview).

What comes out of the box with ElasticSearch? Well, you can index, analyze and search your data. Your documents are stored in indices; each index has its own mapping definition, which you can set in order to analyze your data properly. It supports lots of search scenarios, such as fuzzy searching, synonyms, weighted searching over the submitted terms, and aggregations (facets, ideal for layered navigation filters). As a ranking function it uses BM25, an extension of the TF-IDF (Term Frequency-Inverse Document Frequency) model designed to improve upon some of the latter’s limitations. BM25 computes a score for each document based on which query terms it contains, how often they occur in it, how rare they are across the entire collection, and how long the document is. Do your own research and play with the available config params.
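To make the BM25 description a bit more concrete, here is a minimal sketch of the textbook formula in plain Python. The `k1=1.2` and `b=0.75` values are the usual defaults; the tiny tokenized corpus is made up, and Lucene’s real implementation differs in details, so treat this as an illustration rather than what ES runs internally.

```python
import math

def bm25_score(query_terms, doc_terms, corpus, k1=1.2, b=0.75):
    """Score one tokenized document against a tokenized query (textbook BM25)."""
    n_docs = len(corpus)
    avg_len = sum(len(d) for d in corpus) / n_docs
    score = 0.0
    for term in query_terms:
        tf = doc_terms.count(term)  # term frequency in this document
        if tf == 0:
            continue
        df = sum(1 for d in corpus if term in d)  # documents containing the term
        idf = math.log(1 + (n_docs - df + 0.5) / (df + 0.5))  # rarer terms weigh more
        # Length normalization: long documents need more occurrences to score the same.
        norm = tf + k1 * (1 - b + b * len(doc_terms) / avg_len)
        score += idf * tf * (k1 + 1) / norm
    return score

# Made-up, already tokenized documents.
corpus = [
    ["running", "shoes", "for", "men"],
    ["leather", "shoes"],
    ["running", "jacket"],
]
scores = [bm25_score(["running", "shoes"], doc, corpus) for doc in corpus]
print(scores)
```

The first document matches both query terms, so it ends up with the highest score even though it is the longest one.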

So… all in all, why do you need something else? These ranking functions may seem to do their job already, right? Well, due to a series of factors such as legacy search systems, regular users have been trained to string keywords together in their search queries in order to get relevant results. In other words, the system expects queries to contain mostly keywords, so a query written in natural language tends to return less relevant results.

E.g. "I want to buy sport shoes for running activities" becomes "running shoes".

So, what are your options if you want to process inputs in a different way? You’ll need to vectorize both your data (when indexing) and your users’ search queries.

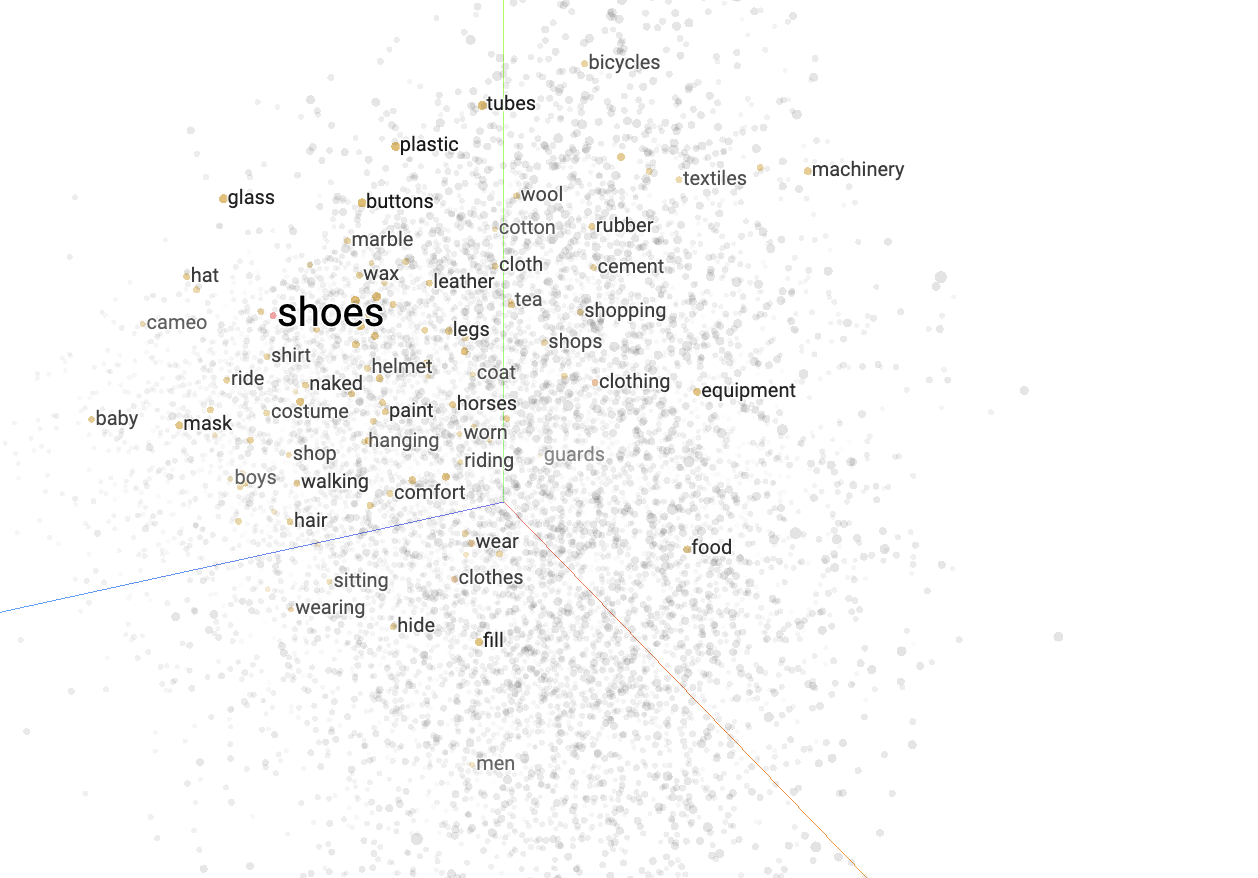

Embeddings are numeric representations of input data (a string, image, audio etc.): the input is converted to a sequence of numbers that helps computers capture the relationships between items. They live in a vector space, so making computers “understand” semantic meaning actually translates into calculating distances. Small distances suggest high similarity. This opens the door to a wide set of use cases:

- search engines with NLP

- recommendation systems

- clustering items

- classification

- anomaly detection
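As a small illustration of "small distances suggest high similarity", the sketch below ranks a few items by cosine similarity. The 3-dimensional vectors are invented by hand for readability; a real model would produce hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hand-made toy "embeddings", not the output of any real model.
vectors = {
    "running shoes": [0.9, 0.1, 0.0],
    "sport sneakers": [0.8, 0.2, 0.1],
    "coffee maker": [0.0, 0.1, 0.9],
}

query = vectors["running shoes"]
ranked = sorted(
    vectors,
    key=lambda name: cosine_similarity(query, vectors[name]),
    reverse=True,
)
print(ranked)
```

The two shoe-related items point in nearly the same direction, so they rank next to each other, while the unrelated item lands last.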

Semantic search takes the meaning of words and their relationships into account when retrieving information from a database. It aims to understand the context, intent, and meaning behind a user’s query, going beyond the literal matching of keywords. This can be achieved using Natural Language Processing (NLP) and machine learning algorithms and models, which are often employed to understand the semantics of text.

Hybrid search combines multiple search methodologies or approaches to provide a more comprehensive set of results. In practice, it means combining semantic search with a basic keyword-based search and ranking items using both scoring functions.

Flows

Simplified flow for indexing:

- prepare index mapping to expect dense vectors

- receive documents (indexing)

- map data to fit your mapping (optional)

- embed your data (either through an external provider such as OpenAI or VertexAI or an open NLP ML model) and index it

- you can do the same for images associated with your items (e.g. use a different model to extract labels from each image and combine them)
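The indexing steps above can be sketched roughly as follows. The `embed` function is a hypothetical stand-in for whatever provider or model you use (its toy output carries no semantic meaning), and the index name `products` and the field names are assumptions for illustration. The output is the NDJSON body that the `_bulk` endpoint expects.

```python
import json

EMBEDDING_DIMS = 4  # toy size; in practice your model dictates this value

def embed(text):
    """Hypothetical placeholder for a real embedding model or provider call."""
    # Deterministic toy vector built from character codes, NOT semantically meaningful.
    vector = [0.0] * EMBEDDING_DIMS
    for i, ch in enumerate(text.lower()):
        vector[i % EMBEDDING_DIMS] += ord(ch) / 1000.0
    return vector

def to_bulk_lines(index_name, documents):
    """Map raw documents to the index mapping and build _bulk NDJSON lines."""
    lines = []
    for doc in documents:
        source = {
            "title": doc["title"],
            "description": doc["description"],
            "description_vector": embed(doc["description"]),
        }
        # Each document is an action line followed by its source line.
        lines.append(json.dumps({"index": {"_index": index_name, "_id": doc["id"]}}))
        lines.append(json.dumps(source))
    return lines

documents = [
    {"id": "1", "title": "Trail runner", "description": "Lightweight running shoes"},
    {"id": "2", "title": "Espresso pro", "description": "Compact coffee maker"},
]
bulk_lines = to_bulk_lines("products", documents)
bulk_body = "\n".join(bulk_lines) + "\n"  # the bulk body must end with a newline
print(bulk_body)
```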

Flow for searching:

- embed the search query, i.e. transform it into a vector representation

- perform a semantic query by comparing vectors

- for hybrid search, also add the keyword based searching logic

- combine scores

Mapping definition

As mentioned, you need to define a field of type “dense_vector”. Pay attention to the dimensions of your vector - they need to match the output size of your model (e.g. OpenAI - 1536; VertexAI - 768, depending on the exact model).
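A minimal sketch of such a mapping, written as a Python dict that you could send as the body of an index-creation request. The field names (`title`, `description`, `description_vector`) are assumptions for illustration; `dims`, `index` and `similarity` are the `dense_vector` parameters available in ES 8.x.

```python
import json

def build_mapping(dims, similarity="cosine"):
    """Index body with two text fields and one indexed dense_vector field."""
    return {
        "mappings": {
            "properties": {
                "title": {"type": "text"},
                "description": {"type": "text"},
                "description_vector": {
                    "type": "dense_vector",
                    "dims": dims,              # must equal the model's output size
                    "index": True,             # needed for approximate kNN search
                    "similarity": similarity,  # e.g. "cosine" or "dot_product"
                },
            }
        }
    }

mapping = build_mapping(dims=1536)
print(json.dumps(mapping, indent=2))
```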


Executing a semantic query with approximate KNN

Given your search query, you can consider the following example:
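A minimal sketch of an approximate kNN request body, assuming a `dense_vector` field named `description_vector` (a made-up name) and a query that has already been embedded. The 3-number vector is a toy placeholder; a real one must have as many dimensions as the mapping declares.

```python
import json

def build_knn_search(query_vector, k=10, num_candidates=100):
    """Body for the _search endpoint using the top-level knn option."""
    if num_candidates < k:
        # Fewer candidates than requested neighbors makes no sense.
        raise ValueError("num_candidates should not be smaller than k")
    return {
        "knn": {
            "field": "description_vector",
            "query_vector": query_vector,
            "k": k,                            # neighbors to return
            "num_candidates": num_candidates,  # candidates examined per shard
        },
        "_source": ["title", "description"],   # skip returning the vector itself
    }

knn_body = build_knn_search([0.12, -0.03, 0.48], k=5, num_candidates=50)
print(json.dumps(knn_body, indent=2))
```

Raising `num_candidates` generally improves recall at the cost of latency, so it is one of the first knobs to benchmark.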


This will bring back the k nearest neighbors of your input vector. You can also use a script_score function, but it does an exact (brute-force) scan over the matching documents, so for performance reasons you might want to avoid it, especially for mid/large sized indices. Benchmark everything before making a decision.

Combining keyword search with semantic search

The idea is pretty basic - ES can execute both of the above-mentioned queries together in the same request. Let’s take a look at a query example:
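A minimal sketch of a hybrid request body, again as a Python dict. It assumes a text field called `title` and a vector field called `description_vector`; both names, and the toy query vector, are placeholders.

```python
import json

def build_hybrid_search(query_text, query_vector, k=10, num_candidates=100):
    """One request carrying both a keyword query and a kNN search."""
    return {
        # Keyword side: regular BM25 match on a text field.
        "query": {"match": {"title": {"query": query_text}}},
        # Semantic side: approximate kNN on the dense_vector field.
        "knn": {
            "field": "description_vector",
            "query_vector": query_vector,
            "k": k,
            "num_candidates": num_candidates,
        },
        "size": k,
    }

hybrid_body = build_hybrid_search("running shoes", [0.12, -0.03, 0.48])
print(json.dumps(hybrid_body, indent=2))
```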


The knn query results are combined with the regular match query’s results through a disjunction (logical OR).

Tips n tricks

For KNN queries, as the ES documentation also advises, prefer dot_product over cosine as the similarity function if you’re aiming for speed. Keep in mind that dot_product requires your vectors to be normalized to unit length, and that the gain also depends on your catalog size. Also, make sure your data nodes have enough memory. ES uses HNSW under the hood - a graph representation of your vectors which is loaded in memory. This means that you need to scale your cluster accordingly so you won’t run out of juice.
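Since dot_product expects unit-length vectors, here is a minimal sketch of L2 normalization you could apply before indexing and before querying. Some models already return normalized vectors, so check that first; the example vectors are made up.

```python
import math

def l2_normalize(vector):
    """Scale a vector so that its Euclidean length is 1."""
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vector]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

unit = l2_normalize([3.0, 4.0])
other = l2_normalize([4.0, 3.0])

# For unit-length vectors the dot product equals the cosine similarity,
# which is why the cheaper dot_product can replace cosine.
similarity = dot(unit, other)
print(unit, similarity)
```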

In order to combine queries as described above, a good trick is to boost each of them. The final score used to rank the most relevant results then becomes a weighted sum:
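A rough sketch of that weighted sum and of a request body carrying the boosts. The weights used here (0.9 for keywords, 0.1 for vectors) are placeholder values, not a recommendation, and the field names are assumptions; tune the weights against your own relevance tests.

```python
def combined_score(match_score, knn_score, match_boost, knn_boost):
    """Final score of a document matched by both the keyword and the kNN side."""
    return match_boost * match_score + knn_boost * knn_score

def build_boosted_hybrid_search(query_text, query_vector, match_boost, knn_boost,
                                k=10, num_candidates=100):
    """Hybrid request where each side carries its own boost."""
    return {
        "query": {
            "match": {"title": {"query": query_text, "boost": match_boost}}
        },
        "knn": {
            "field": "description_vector",
            "query_vector": query_vector,
            "k": k,
            "num_candidates": num_candidates,
            "boost": knn_boost,
        },
        "size": k,
    }

boosted_body = build_boosted_hybrid_search(
    "running shoes", [0.12, -0.03, 0.48], match_boost=0.9, knn_boost=0.1
)
example_score = combined_score(match_score=10.0, knn_score=0.8,
                               match_boost=0.9, knn_boost=0.1)
print(example_score)
```

BM25 scores are unbounded while vector similarity scores sit in a narrow range, so the two boosts also act as a way to bring both sides onto a comparable scale.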


You can do so by adding a boost field to both query and knn in your ES query object.

Additionally, you might want to use RRF (reciprocal rank fusion) once it becomes stable.
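To show what RRF does conceptually, here is a minimal sketch in plain Python. It works on ranks only, so you don’t have to balance BM25 scores against vector scores. The document ids are made up, and the rank constant of 60 is the commonly used default, not something specific to your data.

```python
def reciprocal_rank_fusion(rankings, rank_constant=60):
    """Fuse several ranked lists of ids; each hit adds 1 / (rank_constant + rank)."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (rank_constant + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)

keyword_hits = ["a", "b", "c"]  # ids ordered by the keyword query
vector_hits = ["b", "d", "a"]   # ids ordered by the kNN search
fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
print(fused)
```

Documents that appear near the top of both lists win, while those found by only one side still make it into the fused result.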

Another observation worth adding here is that the knn option accepts a list of searches, so you can use it with multiple dense vector fields - say you’ve embedded images in one field, product descriptions in a different one and so on.
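A minimal sketch of such a request, assuming two hypothetical vector fields, `description_vector` and `image_vector`, each with its own query vector and boost. The toy vectors and the 0.6/0.4 split are placeholders.

```python
import json

def build_multi_knn_search(text_vector, image_vector, k=10, num_candidates=100):
    """Request body with one kNN search per dense_vector field."""
    return {
        "knn": [
            {
                "field": "description_vector",
                "query_vector": text_vector,
                "k": k,
                "num_candidates": num_candidates,
                "boost": 0.6,
            },
            {
                "field": "image_vector",
                "query_vector": image_vector,
                "k": k,
                "num_candidates": num_candidates,
                "boost": 0.4,
            },
        ],
        "size": k,
    }

multi_body = build_multi_knn_search([0.12, -0.03, 0.48], [0.40, 0.10, -0.20])
print(json.dumps(multi_body, indent=2))
```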

Alternatives

Well, I’ve tried different solutions such as Weaviate or Milvus, but the overall search performance was not that good, or they were lacking hybrid search techniques for term-based matching. Also, ES is well known to scale as long as you have enough hardware available - there are lots of studies and use cases with petabyte-sized indices, used mainly for logging and observability.