Recently, I’ve been working on an old hobby project as I needed to rehost it somewhere else. The website serves something like 10k unique users / day and it’s completely community-driven - our users interact on the website through comments, forum posts, a chat widget (self-hosted) and the content they’re providing (movie subtitles). I’ll guide you through the entire process.

Initial landscape

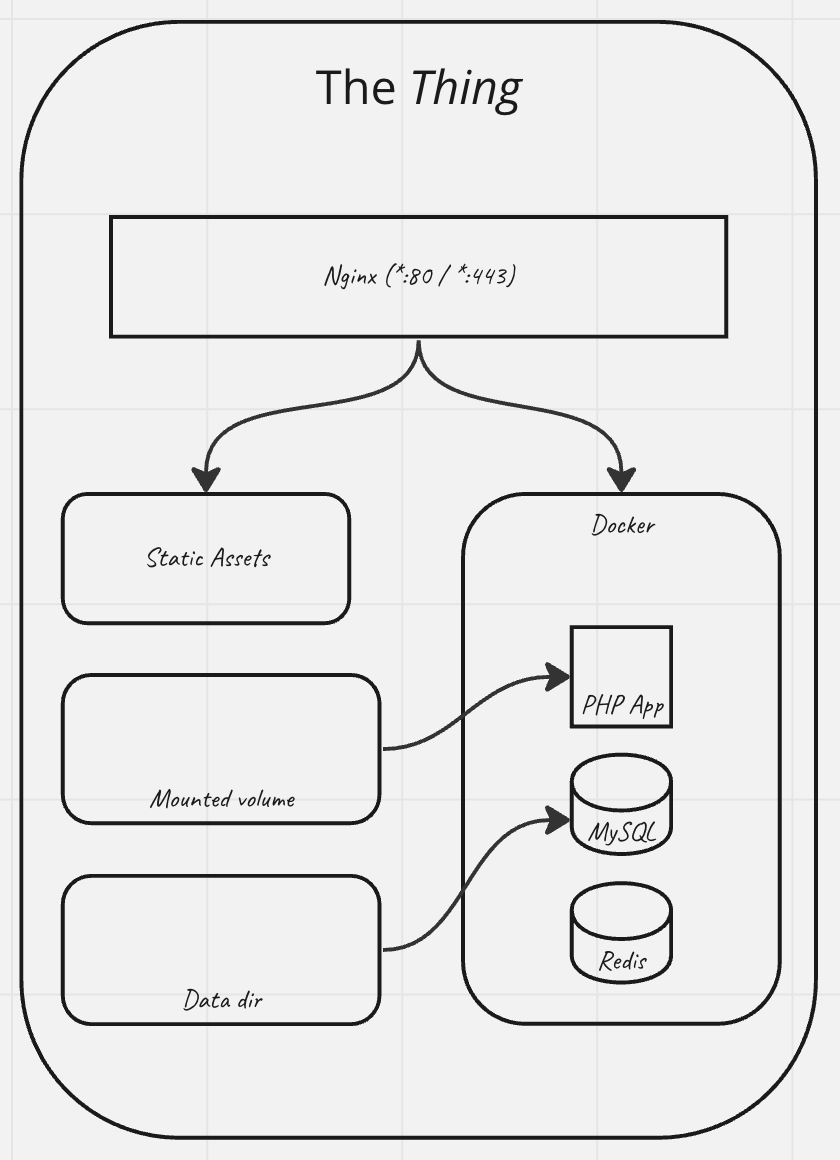

The initial architecture was pretty simple:

- an old PHP app still running on PHP 5.4.x (?) on CentOS 5; SQL database - MySQL 5-something;

- hosted on a dedicated server - colocation costs got up to 40 EUR/month; we were completely aware that self-owned hardware can become a headache, especially when it gets old; the old server had something like 4GB of RAM and a Core2Duo - it was 10 years old, and the storage drives (HDDs!) had been replaced a few years ago

- traffic stats in terms of bandwidth: something around 1 TB/month; mostly served through Cloudflare, with a hit rate of 85%

- overall load times for the website were decent enough - 300-600ms / page

- caching was implemented on memcache

- the website was initially written somewhere around 2006; I started contributing around 2008, did a redesign in 2009, then a complete rewrite in 2010

The plan

Since my time is limited and I hadn’t touched PHP for a few years, I was a little bit afraid of digging up dead bodies. Plus, I wanted to use a more recent stack to host the solution, so this was my initial draft:

- containerize the app and use latest versions for the database layer

- ditch memcache and replace it with redis

- migrate the data over to the new database and apply fixes where needed

- rewrite the entire solution in golang so it could be very resource-friendly as opposed to PHP; this should be an API and the rendering part should be developed using Next.js (SSR is needed)

- dump trailer files as they were sucking up a lot of storage and produced a lot of traffic which wasn’t cached at all (due to Cloudflare’s streaming data policy) - switch over to embedded YouTube trailers instead

- remove the self-hosted chat and migrate users to a private Discord server; bonus - Discord also has a pretty decent mobile app

I started with the first step and it went pretty well. No headaches whatsoever in migrating my data over to MySQL 8. Then work kicked in with some projects we had to deliver quite urgently, so around the end of December I had close to no spare time for my hobby project. Things got even tighter as we had a hardware failure around January, so I needed to decide whether to close the website or revise my plan entirely. I chose the latter, adding some extra steps:

- try to migrate the app over to PHP 8.2; the codebase was ancient, as stated, but pretty simple and straightforward; back then, I was smart enough to split the logic pretty well, so only a few libraries had to be patched

- benchmark performance on PHP 8 and decide whether it’s worth it; to be noted that the new hosting should be pretty cheap (as the project doesn’t feature any ads or monetization, I’m not interested in making a buck out of it), therefore resource usage matters a lot in the overall scheme

- make the project composer-compatible and install some packages in order to finish up the memcache->redis transition

So, let’s see how it really went.

PHP8 upgrade

As mentioned above, I wrote this entire mini-framework back in 2010. It’s been a while, but it has proved to be pretty stable. I haven’t noticed any hiccups through its lifetime and so far our data hasn’t been hacked. When upgrading to PHP 8, I had some small headaches to handle - mostly changes in the mysql lib, as it was expecting different parameters to be passed around. But, as the database layer was already wrapped into its own class, fixing it really took me somewhere around 30 minutes. Woo! The website was loading on my local env. After extracting some URLs, I was able to push some requests to it with siege. Pretty good: I was able to handle 100 RPS with only 64MB of RAM allocated to the FPM container, with no caching at all and the MySQL db taking the entire burden.
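A minimal sketch of that load-test step. The post does not say where the URLs came from; this assumes they are pulled from a web server access log in the common "combined" format, and that the local app listens on `http://localhost:8080`. The function name, file names and siege settings are illustrative.

```shell
#!/usr/bin/env bash
# Assumption: access log in "combined" format, where field 6 is the quoted
# method ("GET), field 7 the request path and field 9 the status code.

# Print unique, successfully served GET paths as absolute URLs.
extract_urls() {
  local logfile="$1" base="$2"
  awk '$6 == "\"GET" && $9 == 200 { print $7 }' "$logfile" \
    | sort -u \
    | sed "s|^|${base}|"
}

# Usage (hypothetical paths and numbers):
#   extract_urls access.log http://localhost:8080 > urls.txt
#   siege -b -c 50 -t 60S -f urls.txt
# -b drops the delay between requests, -c sets concurrent users,
# -t the test duration, -f the file with the URLs to hit.
```

Replaying real paths rather than a single URL keeps the benchmark closer to production traffic, since listing pages and detail pages usually stress the database differently.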

Adding composer support was again pretty easy. I ran a few commands, had vendor/autoload.php ready, added it to my include_once sequence and carried on. Ready to bump the caching layer now.

I googled a bit to see what packages are out there for PHP and Redis - I was pleased to notice that predis/predis is still widely used (I was using it back in my PHP days), so I went for it. As mentioned before, I already had the logic split into different libraries, so switching the caching layer from memcache to redis was yet again simple. As the data I’m caching from the database layer is pretty simple (associative arrays, mostly), I replaced the serialize/unserialize calls with json_encode/json_decode. All went well.

For the other deprecated calls I wasn’t able to find myself, I used RectorPHP, which proved to be both easy to set up and useful. I patched another batch of files and my overall trust in my decisions grew a lot.

Time to do another batch of tests - it went up over 400 RPS. Excellent! Time to migrate now.

Production setup

As mentioned, I was using docker locally to containerize the app. I also wanted to use the same approach for the production environment but with different defaults. And the nginx webserver shouldn’t be exposed through docker, but rather run directly on the host. All the PHP requests can be passed to the FPM container through the locally exposed port (I know, sockets are faster, but they aren’t a solution for my needs). The app -> mysql and app -> redis communication will go through the docker network, so no need to expose anything.

A brief diagram:

On top of that, I also put together some quick bash scripts for setting things up. The most important part is the initial ufw setup, as you don’t want to expose anything by mistake outside your machine (ofc, you can also configure that at the firewall level offered by any cloud provider out there through ingress/egress tinkering).
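A minimal sketch of such a ufw bootstrap, not the original script. It assumes the host only needs SSH (22), HTTP (80) and HTTPS (443) open; the `run` helper and the `DRY_RUN` switch are illustrative additions so the rules can be reviewed before they are applied.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Print the command when DRY_RUN=1, execute it otherwise.
run() {
  if [[ "${DRY_RUN:-0}" == "1" ]]; then
    echo "$*"
  else
    "$@"
  fi
}

setup_firewall() {
  run ufw default deny incoming   # drop everything not explicitly allowed
  run ufw default allow outgoing
  run ufw allow 22/tcp            # SSH (assumed port)
  run ufw allow 80/tcp            # HTTP, served by nginx on the host
  run ufw allow 443/tcp           # HTTPS
  run ufw --force enable          # --force skips the interactive prompt
}

# Rules are only applied when the script is called with "apply" (needs root).
if [[ "${1:-}" == "apply" ]]; then
  setup_firewall
fi
```

Running `DRY_RUN=1 ./firewall.sh apply` (hypothetical file name) prints the commands without touching the firewall. One caveat worth keeping in mind: Docker writes its own iptables rules for published ports, so a container port published on all interfaces stays reachable regardless of ufw; binding published ports to 127.0.0.1 keeps them local.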

It went well and quite fast. Migrating the database and cleaning it up took a little bit longer, but the migration went just fine. I had also written a cutover plan in my notes app beforehand, so I wouldn’t miss anything.

All in all, this phase took 4 hours to complete. After running the tests from my cutover plan, I was sure I was ready to go with the new environment and softly retire the old server.

Profiling the thing yet again

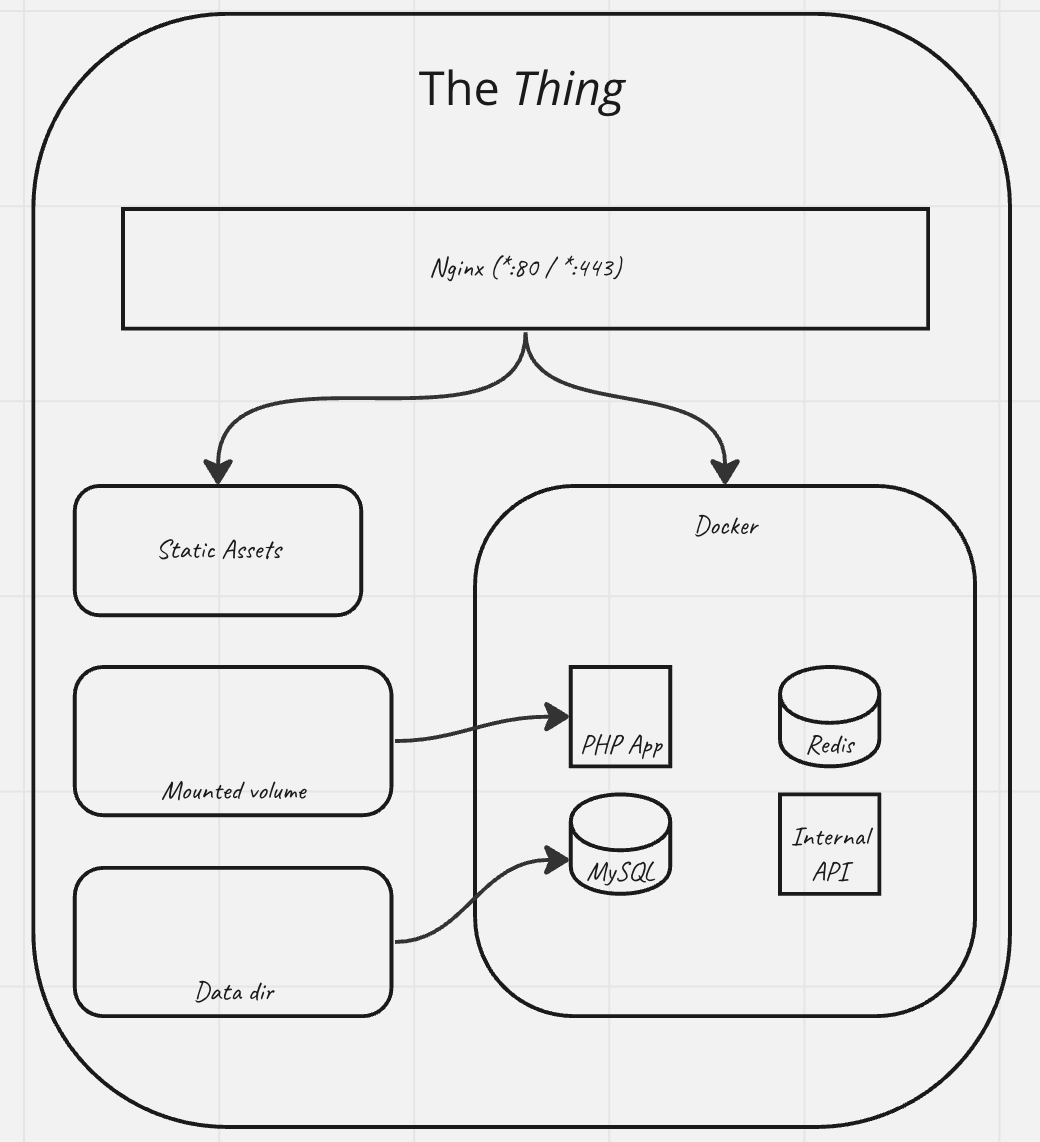

I noticed that some edges were taking way too much time. Mostly, external requests used to enrich local data - such as scraping IMDb / themoviedb (added later) / some Romanian website. Since they were made in PHP using the old codebase, there was no support for parallel calls and I didn’t even bother to see if it’s worth adding it in PHP. So I went the other route and created an internal API in golang which does all of the above. I found some great libraries and moved pretty fast on creating an API to meet my needs. On top of this, I’ve even replaced the trailers (initially hosted on our machine - 20k+ files, most of the traffic came from streaming them directly) with YouTube entries. Most movies are available there, so it seemed to be the logical decision to make.

The internal API is not exposed (yet). It’s only consumed by the PHP app when it needs to hydrate data that is later saved in the MySQL database. The enrichment step went down from 3 sec to 300ms.
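The gain comes from fanning the external requests out instead of running them one after another: the total time approaches that of the slowest source rather than the sum of all of them. The internal API itself is written in golang; the bash sketch below only illustrates the fan-out idea, with hypothetical function names and a 5-second timeout picked as an assumption.

```shell
#!/usr/bin/env bash

# Fetch one source into a file; fails on HTTP errors or after 5 seconds.
fetch_one() {
  curl -fsS --max-time 5 -o "$2" "$1"
}

# Start one background job per URL, then wait for all of them.
# Bodies land in <outdir>/0.body, <outdir>/1.body, ... in argument order.
# Returns non-zero if at least one fetch failed.
fetch_all() {
  local outdir="$1"; shift
  local pids=() i=0 failed=0 url pid
  for url in "$@"; do
    fetch_one "$url" "$outdir/$i.body" &
    pids+=("$!")
    i=$((i + 1))
  done
  for pid in "${pids[@]}"; do
    wait "$pid" || failed=$((failed + 1))
  done
  [ "$failed" -eq 0 ]
}

# Usage (placeholder URLs):
#   out="$(mktemp -d)"
#   fetch_all "$out" "https://example.org/a" "https://example.org/b"
```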

Monitoring and tweaking configs

I left the project on its own for the next 2 weeks. I had no errors logged whatsoever. The stats of the VPS were just fine, but I noticed spikes on the database layer now and then; after enabling the slow query log, I found the offending queries. So I switched back to dev mode in order to optimize them - added a few missing indexes and ditched any LIKE '%[term]%' calls for term searching; instead, I went with a fulltext index on the fields used for search. Great success! A regular search went down from 500ms to less than 100ms. MySQL is great if you also tune the default stopwords to match your case, but even the defaults provide excellent results.
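A sketch of what that change looks like in SQL, wrapped in a small bash function so it can be reviewed before being piped into a mysql client. The `movies` table, the `title` column, the index name and the 0.5-second threshold are all hypothetical; the real schema is not shown in this post.

```shell
#!/usr/bin/env bash

# Prints the statements; nothing runs until the output is piped to a client.
search_tuning_sql() {
  cat <<'SQL'
-- Log every statement slower than 0.5 s (threshold is an assumption).
SET GLOBAL slow_query_log = 'ON';
SET GLOBAL long_query_time = 0.5;

-- Hypothetical table and column: index the field used for search.
ALTER TABLE movies ADD FULLTEXT INDEX ft_movies_title (title);

-- Before: a leading wildcard cannot use a regular index, so rows get scanned.
--   SELECT id, title FROM movies WHERE title LIKE '%matrix%';
-- After: the lookup goes through the FULLTEXT index.
SELECT id, title
FROM movies
WHERE MATCH(title) AGAINST('matrix' IN NATURAL LANGUAGE MODE)
LIMIT 20;
SQL
}

# Usage (hypothetical credentials and database name):
#   search_tuning_sql | mysql -u root -p mydb
```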

The docker-compose manifest

As mentioned, I’m managing things through docker. The docker layer uses a pretty simple docker-compose manifest file, and MySQL gets its own conf file.

Administration and backups

There are several things to consider - backups and how you handle updates. Note that solution updates aren’t supposed to happen too often; the codebase was nearly untouched for 10 years, so I didn’t need to go too crazy over it. MySQL gets backed up locally every day, keeping only the last x copies; the server also gets backed up daily, so in case of a major outage, I can use the snapshots to restore it.

I added the backup jobs to the crontab of my local unprivileged app user on the host and I could sleep quite tight.
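A minimal sketch of a daily dump with rotation, not the original snippets. It assumes a compose service named `mysql` built on the official image (which provides `MYSQL_ROOT_PASSWORD` and `MYSQL_DATABASE` inside the container); the paths, the retention of 7 copies and the cron schedule are placeholders.

```shell
#!/usr/bin/env bash
set -euo pipefail

COMPOSE_DIR="${COMPOSE_DIR:-$HOME/app}"          # assumed project location
BACKUP_DIR="${BACKUP_DIR:-$HOME/backups/mysql}"  # assumed backup location
KEEP="${KEEP:-7}"                                # the "last x copies"

# Dump the database from inside the (assumed) "mysql" compose service.
dump_database() {
  (cd "$COMPOSE_DIR" && docker compose exec -T mysql sh -c \
    'exec mysqldump --single-transaction -uroot -p"$MYSQL_ROOT_PASSWORD" "$MYSQL_DATABASE"')
}

# Delete all but the newest $2 dumps in $1. Names embed a sortable timestamp,
# so a reverse name sort puts the newest files first.
rotate_backups() {
  local dir="$1" keep="$2" old
  find "$dir" -maxdepth 1 -type f -name 'db-*.sql.gz' \
    | sort -r \
    | tail -n +"$((keep + 1))" \
    | while IFS= read -r old; do rm -f -- "$old"; done
}

backup() {
  mkdir -p "$BACKUP_DIR"
  local target
  target="$BACKUP_DIR/db-$(date +%Y%m%d-%H%M%S).sql.gz"
  # Write to a temp name first so a failed dump never counts as a backup.
  dump_database | gzip > "$target.tmp"
  mv "$target.tmp" "$target"
  rotate_backups "$BACKUP_DIR" "$KEEP"
}

# Example crontab entry (hypothetical path): 15 3 * * * /home/app/bin/backup.sh run
if [[ "${1:-}" == "run" ]]; then
  backup
fi
```

The dump and the rotation are kept as separate functions so the retention logic can be tried against a scratch directory without touching the database.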

A deployment can be done manually through another simple sh script. It’s easy to set up a webhook to do this automatically, but considering the number of changes I’ve been pushing over the last 10 years… I thought it made no sense. So I just put together a Makefile.

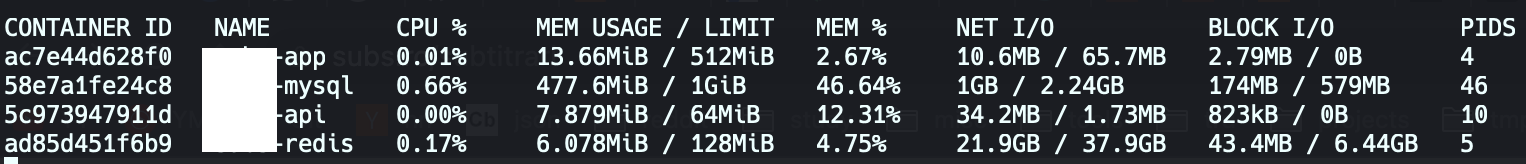

Performance stayed pretty steady after tweaking the memory limits of each container a bit.

Revamping the interface

Time went on, a few weeks passed, and I noticed no errors, as mentioned above. The environment was stable, so I could go on with revamping the user experience a little bit. The old website had custom CSS with no framework behind it, and it lacked any support for responsive layouts. I’m no frontend guy, but a dear friend of mine kept telling me very nice things about TailwindCSS and its utility-first mindset. I hopped on that train and ported the entire interface to Tailwind in under one week - it was a fun ride, as I had only read through their documentation. Somewhere around 20% of our traffic comes from mobile devices, so I can only assume some users must be very happy now that they don’t have to zoom in.

Recap

This was indeed a fun ride, concluded quite fast, in under 1.5 months, over my weekends and the occasional night when I felt like doing some work just to ease my insomnia.

The result is quite pleasing in many respects:

- one-man job; the website is way faster even though it’s using fewer resources

- switched to an infrastructure model which allows daily backups without any headaches on a fairly cheap cloud provider

- with only 3 vCPUs and 4 GB of RAM, I can run the entire stack and still have a good share of resources available

- reduced costs by something like 60 to 70%

- the interface has been revamped entirely

- the stack is now much more modern

- I’ve followed my principle of using the right tool for the job without giving up my acquired taste for pushing golang along the way; and I have also avoided rewriting the entire thing in golang, as it would have taken way too much time, time which I wouldn’t have had with a baby on the way

- using no framework and staying very close to a barebones approach can be useful in certain situations; if this had used, let’s say, Symfony 1, upgrading the entire stack would have required a full rewrite for sure

- PHP’s backward compatibility (BC) between major versions is really neat